Today’s world is moving quickly towards using AI and Machine Learning in every field. The key to all of this is data. If, as Machine Learning Engineers, we can understand the data and restructure it to fit our needs, we have completed half the task.

Let us try to learn to perform EDA (Exploratory Data Analysis) on data.

What we will learn in this tutorial:

- Collect data for our application.

- Structure the data to our needs.

- Visualize the data.

Let’s get started. We will fetch some sample data: the Iris dataset, a very common dataset used when getting started with Machine Learning and Deep Learning.

1. Collection of Data: The data for an application can be found on websites such as Kaggle and UCI, or it has to be built specifically for that application. For example, if we want to classify images of dogs and cats, we don’t need to build a dataset by collecting the images ourselves, as several datasets are already available. Here, let’s inspect the Iris dataset.

Let’s fetch the data:

from sklearn.datasets import load_iris

import pandas as pd

data = load_iris() #3.

df = pd.DataFrame(data.data, columns=data.feature_names)#4.

Line #3 loads the Iris dataset that ships with sklearn. Line #4 converts it into a pandas DataFrame, which is very commonly used to explore datasets with a row-and-column structure.
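The DataFrame built above holds only the four measurements. As a minimal sketch, the class labels that `load_iris()` also returns can be attached in a separate copy; the names `df_labeled` and `species` are chosen here for illustration and are not part of the original walkthrough.

```python
from sklearn.datasets import load_iris
import pandas as pd

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

# data.target holds integer class codes (0, 1, 2);
# data.target_names maps those codes to the species names.
# Work on a copy so that df itself stays numeric-only.
df_labeled = df.copy()
df_labeled["species"] = data.target_names[data.target]

# Number of samples per species.
print(df_labeled["species"].value_counts())
```

Keeping the labels in a copy means later numeric operations on `df`, such as `df.corr()`, are not affected by a text column.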

The first 5 rows of the data can be viewed using:

df.head()

The number of rows and columns, and the names of the columns of the dataset, can be checked with:

print(df.shape)
# Output: (150, 4)

print(df.columns)
# Output:
# Index(['sepal length (cm)', 'sepal width (cm)', 'petal length (cm)',
#        'petal width (cm)'],
#       dtype='object')

We can also download the dataset directly from the UCI Machine Learning Repository. The downloaded CSV file can be loaded into df as:

df = pd.read_csv("path to csv file")
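To my knowledge the UCI file has no header row, so `read_csv` would otherwise treat the first sample as column names. The sketch below assumes that layout (four measurements followed by the class label) and uses three in-memory rows in place of the downloaded file; `df_csv`, `column_names`, and the `species` label are illustrative names.

```python
import io
import pandas as pd

# Three rows in the assumed layout of the UCI file, standing in for the download.
sample_csv = io.StringIO(
    "5.1,3.5,1.4,0.2,Iris-setosa\n"
    "7.0,3.2,4.7,1.4,Iris-versicolor\n"
    "6.3,3.3,6.0,2.5,Iris-virginica\n"
)

# Names chosen to match the sklearn feature names; "species" is our own label.
column_names = [
    "sepal length (cm)",
    "sepal width (cm)",
    "petal length (cm)",
    "petal width (cm)",
    "species",
]

# header=None tells pandas that the first line is data, not column names.
df_csv = pd.read_csv(sample_csv, header=None, names=column_names)
print(df_csv.head())
```

With a real download, `sample_csv` would be replaced by the path to the file.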

2. Structuring the Data: Very often the dataset will have several features that don’t directly affect our output. Using such features is wasteful, as it increases memory use and can sometimes introduce errors.

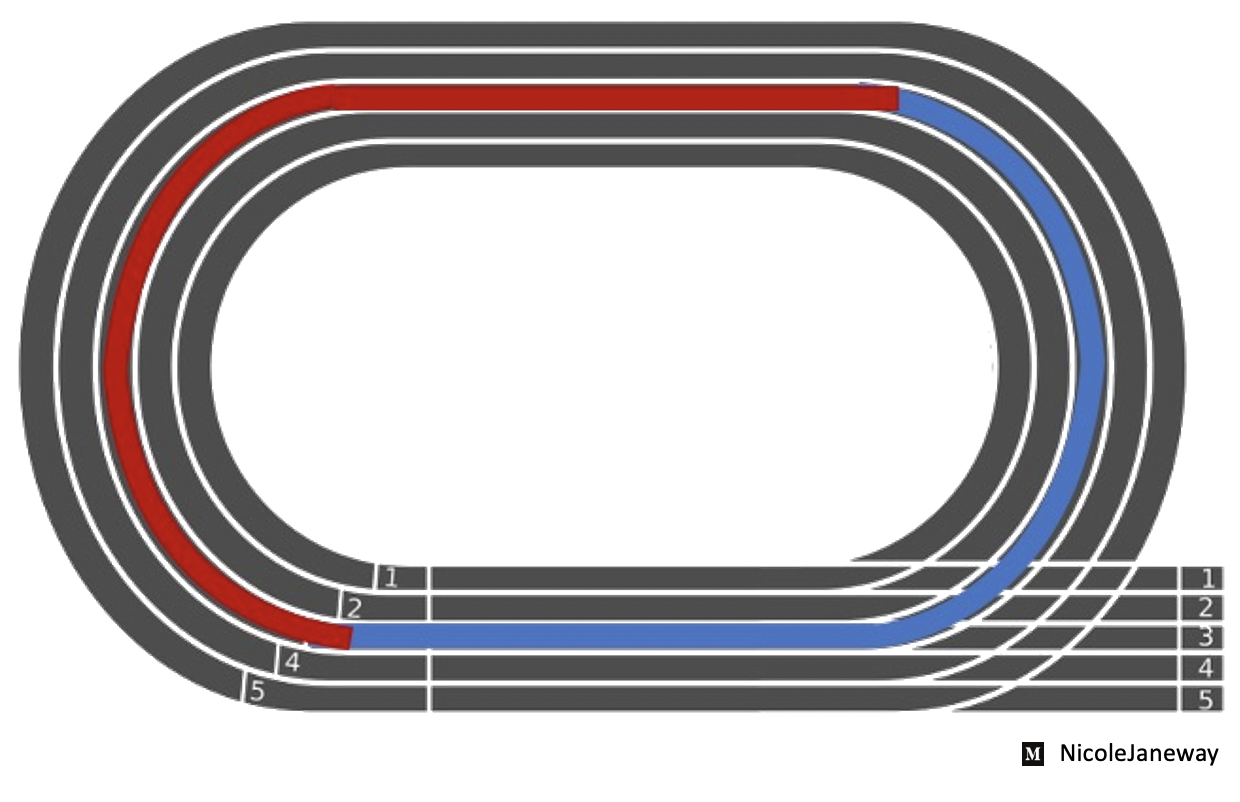

We can check which columns matter most for the output column by looking at the correlation between the output column and each input. Let us try that out:

df.corr()

The correlation matrix shows how strongly each pair of features is linearly related to one another.

So if our output column were sepal length (cm), the output y would be ‘sepal length (cm)’ and the input X would be ‘petal length (cm)’ and ‘petal width (cm)’, as they have a higher correlation with y.

Note: If ‘sepal width (cm)’ had a correlation of -0.8, we would keep it as well. Although the value is negative, it still has a large impact on the output y (y decreases as the feature increases).

Note: The value of a correlation in a correlation matrix varies between -1 (perfect negative linear relationship) and +1 (perfect positive linear relationship). Values near 0 indicate little or no linear relationship.
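The selection described above can be sketched in a few lines. The threshold of 0.5 is an arbitrary cut-off chosen for illustration, and `target`, `threshold`, and `selected` are illustrative names. The absolute value is used so that strongly negative correlations are kept too.

```python
from sklearn.datasets import load_iris
import pandas as pd

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

target = "sepal length (cm)"
threshold = 0.5  # arbitrary cut-off, chosen only for this illustration

# Correlation of every other feature with the target column.
corr_with_target = df.corr()[target].drop(target)

# Keep features whose absolute correlation exceeds the threshold,
# so that strong negative correlations are retained as well.
selected = corr_with_target[corr_with_target.abs() > threshold].index.tolist()

X = df[selected]
y = df[target]

print(corr_with_target)
print(selected)
```

On the Iris data this keeps the two petal measurements and drops ‘sepal width (cm)’, whose correlation with sepal length is weak.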

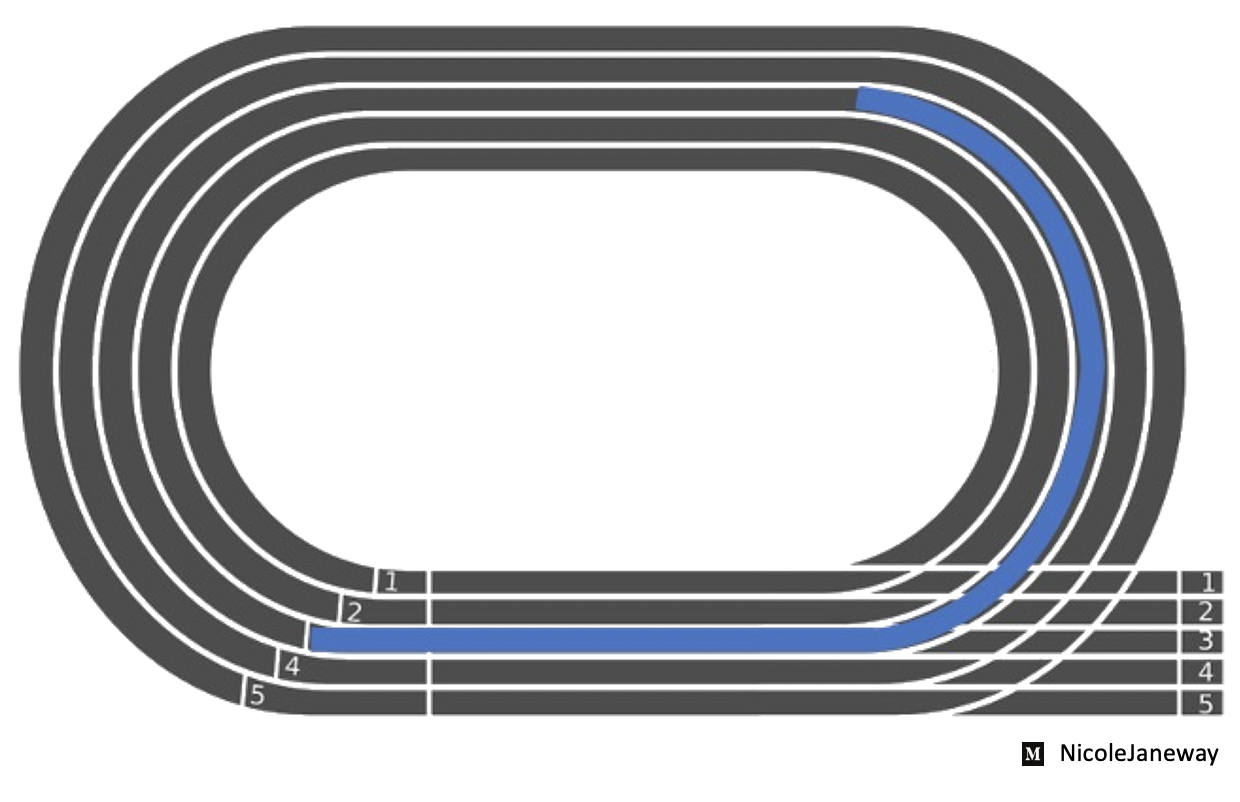

3. Visualize the Data: This is a very important step as it can help in three ways:

- It helps you understand how the data is distributed, i.e. whether the values lie within a small range or are spread more widely.

- It helps you understand decision boundaries.

- It lets you present the data to other people in a form that is easier to grasp than tables.

There are several ways to plot and present the data, such as histograms, bar charts, pair plots, etc.
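As one example of a pair plot, the sketch below uses `scatter_matrix` from `pandas.plotting` and colors each point by its class code, which gives a rough view of how well the classes separate. Coloring by `data.target` is an addition for illustration and is not something the text above prescribes.

```python
from sklearn.datasets import load_iris
import pandas as pd
from pandas.plotting import scatter_matrix

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

# One scatter plot per pair of features, with histograms on the diagonal.
# c=data.target is forwarded to matplotlib's scatter and colors points by class code.
axes = scatter_matrix(df, c=data.target, figsize=(8, 8), diagonal="hist")

# scatter_matrix returns a 2-D array of matplotlib axes, one per feature pair.
print(axes.shape)
```

Outside a notebook, a call to `matplotlib.pyplot.show()` is typically needed to display the figure.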

Let’s see how we plot a histogram for the IRIS dataset.

df.plot.hist(subplots=True, grid=True)

Looking at the histogram makes it easier to see the range of values for each feature.
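The ranges read off the histogram can be cross-checked numerically. This is a small sketch; `ranges` is an illustrative name.

```python
from sklearn.datasets import load_iris
import pandas as pd

data = load_iris()
df = pd.DataFrame(data.data, columns=data.feature_names)

# Minimum and maximum of every feature, transposed so each feature is a row.
ranges = df.agg(["min", "max"]).T

# Width of the interval covered by each feature.
ranges["range"] = ranges["max"] - ranges["min"]

print(ranges)
```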

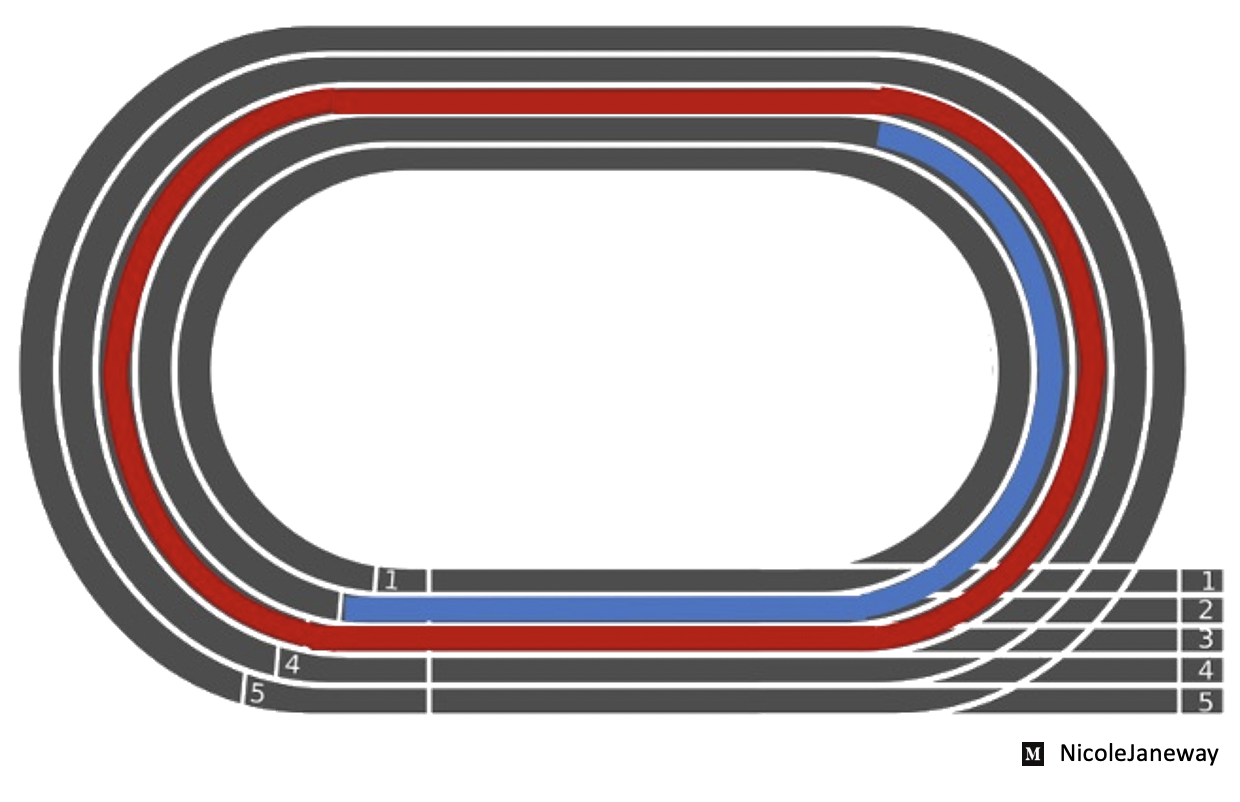

Let’s now plot the raw values of each feature as a line plot against the row index.

df.plot(subplots=True)

Apart from these, several other graphs can be plotted easily, depending on the application.